Hi, I'm Aagam Sogani

A developer passionate about

Work Experience

ML Researcher

madSystems at the University of Wisconsin-Madison

July 2025 - Present

Co-Lead Software Developer, Undergraduate Researcher

University of Wisconsin-Madison MAGIC Lab

May 2025 - Present

AI Engineering Intern

Shaachi

Jan 2025 - April 2025

Software Engineering Intern

KidzJet

Oct 2024 - Dec 2024

Software Engineering Intern

Jain Center of Northern California

June 2024 - Aug 2024

Projects

THVO Educational Game

An interactive educational game I helped develop at UW-Madison's MAGIC Lab that teaches logic and geometry. Funded by the NSF, the game uses a webcam-based pose-recognition pipeline that lets students physically act out concepts to improve retention. Using MediaPipe, the software recognizes students' poses and verifies each step in real time, unlocking solutions and feedback. Built for classroom deployment with role-based menus and lesson editors; telemetry is streamed to Firebase for research.

- Used by 30+ middle-schoolers in a successful pilot program.

- Collected and processed over 2 GB of pose data for an NSF-funded research study.
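The real-time step check can be sketched roughly as below. This is a minimal illustration, not the game's actual code: it assumes pose landmarks have already been extracted (for example by MediaPipe) into `(x, y)` positions, ideally in pixels so the frame's aspect ratio does not skew angles. The landmark names, the `PoseStep` class, the tolerance, and the hold-frame count are all hypothetical. A step counts as completed only when a joint angle stays near its target for several consecutive frames, which filters out single-frame jitter.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, Tuple

Point = Tuple[float, float]  # (x, y) landmark position, e.g. in pixels


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle ABC in degrees (0-180), measured at the middle landmark b."""
    ang_ab = math.atan2(a[1] - b[1], a[0] - b[0])
    ang_cb = math.atan2(c[1] - b[1], c[0] - b[0])
    deg = abs(math.degrees(ang_ab - ang_cb))
    return 360.0 - deg if deg > 180.0 else deg


@dataclass
class PoseStep:
    """Hypothetical lesson step: hold one joint near a target angle for several frames."""

    joint: Tuple[str, str, str]  # (proximal, vertex, distal) landmark names
    target_deg: float
    tolerance_deg: float = 15.0
    hold_frames: int = 10
    _streak: int = field(default=0, init=False, repr=False)

    def update(self, landmarks: Dict[str, Point]) -> bool:
        """Feed one frame of landmarks; return True once the pose has been held long enough."""
        a, b, c = (landmarks[name] for name in self.joint)
        if abs(joint_angle(a, b, c) - self.target_deg) <= self.tolerance_deg:
            self._streak += 1
        else:
            self._streak = 0  # any off-target frame restarts the count
        return self._streak >= self.hold_frames


if __name__ == "__main__":
    step = PoseStep(joint=("left_shoulder", "left_elbow", "left_wrist"), target_deg=90.0, hold_frames=3)
    right_angle = {"left_shoulder": (0.0, 1.0), "left_elbow": (0.0, 0.0), "left_wrist": (1.0, 0.0)}
    print([step.update(right_angle) for _ in range(3)])  # [False, False, True]
```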


Shaachi Sales Outreach Platform

Built the core AI backend for a HubSpot-integrated platform, used by B2B clients including Okta, that writes, sends, and A/B tests over 10,000 personalized emails per month.

- Deployed Llama-3 on AWS Lambda, cutting inference latency by 40% while running a fully serverless architecture for under $15/month.

- Authored the official HubSpot Marketplace app (30+ installs, 4.9-star rating), syncing up to 2M contacts bidirectionally.

- Integrated Grafana and Datadog monitors, reducing Mean Time To Resolution (MTTR) by 65%.

- Pilot customers saw a 60% increase in email response rate and a 40% increase in SQL-to-win conversion rate within 6 weeks.
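One common way to decide an email A/B test is a two-proportion z-test on reply rates. The sketch below is a hedged illustration of that standard technique, not the platform's actual method; the function names and the reply counts in the example are hypothetical.

```python
import math
from typing import Tuple


def normal_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def two_proportion_z_test(replies_a: int, sent_a: int, replies_b: int, sent_b: int) -> Tuple[float, float]:
    """Two-sided z-test for a difference in reply rates between variants A and B."""
    rate_a = replies_a / sent_a
    rate_b = replies_b / sent_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (replies_a + replies_b) / (sent_a + sent_b)
    se = math.sqrt(pooled * (1.0 - pooled) * (1.0 / sent_a + 1.0 / sent_b))
    if se == 0.0:
        raise ValueError("no variance: every email had the same outcome")
    z = (rate_b - rate_a) / se
    p_value = 2.0 * (1.0 - normal_cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 50 replies out of 1,000 sends for A, 80 out of 1,000 for B.
    z, p = two_proportion_z_test(50, 1000, 80, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

With these hypothetical counts, z comes out at roughly 2.7, so variant B's higher reply rate would be unlikely to be noise at a 0.05 significance level.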

Fine-tuning an LLM for COVID-19 Stance Detection

Fine-tuned Google's Flan-T5 Large model to classify the stance of COVID-19-related tweets (in favor, against, neutral). Designed and trained a reproducible NLP pipeline that addressed severe class imbalance with oversampling, boosting minority-class performance and raising weighted F1 from a zero-shot baseline of 0.54 to 0.78.
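For reference, weighted F1 averages each class's F1 score in proportion to how many true examples that class has. The standard-library sketch below is illustrative and its function names are hypothetical; scikit-learn's `f1_score(y_true, y_pred, average="weighted")` computes the same quantity.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def per_class_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> Dict[Hashable, float]:
    """F1 score for every label that appears in y_true."""
    scores = {}
    for label in set(y_true):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        scores[label] = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return scores


def weighted_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Average of per-class F1, weighted by each class's share of y_true."""
    support = Counter(y_true)
    f1 = per_class_f1(y_true, y_pred)
    return sum(f1[label] * count for label, count in support.items()) / len(y_true)


if __name__ == "__main__":
    y_true = ["favor", "favor", "favor", "against", "neutral", "neutral"]
    y_pred = ["favor", "favor", "neutral", "against", "neutral", "favor"]
    print(round(weighted_f1(y_true, y_pred), 3))  # 0.667
```

Because the weights follow class frequency, a high weighted F1 can coexist with a poor minority-class score, which is why per-class F1 is worth reporting alongside it.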

- Built modular PyTorch + Hugging Face training scripts with error-analysis and evaluation utilities.

- Improved minority-class F1 from 0.01 → 0.45 via oversampling strategies.

- Adopted modern MLOps practices: version-controlled checkpoints, documented arguments, tracked artifacts.
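Random oversampling, one of the simplest oversampling strategies, duplicates minority-class examples until every class is as large as the biggest one. The sketch below assumes the data is a list of tweet texts with string labels; `random_oversample` is a hypothetical helper, not the project's actual code.

```python
import random
from collections import Counter, defaultdict
from typing import List, Sequence, Tuple


def random_oversample(texts: Sequence[str], labels: Sequence[str], seed: int = 0) -> Tuple[List[str], List[str]]:
    """Duplicate minority-class examples (with replacement) until every class matches the largest."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for text, label in zip(texts, labels):
        by_label[label].append(text)
    target = max(len(group) for group in by_label.values())

    pairs = []
    for label, group in by_label.items():
        extra = [rng.choice(group) for _ in range(target - len(group))]
        pairs.extend((text, label) for text in group + extra)
    rng.shuffle(pairs)  # avoid long runs of one class in the training order
    return [text for text, _ in pairs], [lab for _, lab in pairs]


if __name__ == "__main__":
    texts = [f"tweet {i}" for i in range(8)]
    labels = ["favor"] * 5 + ["against"] + ["neutral"] * 2
    _, new_labels = random_oversample(texts, labels)
    print(sorted(Counter(new_labels).items()))  # [('against', 5), ('favor', 5), ('neutral', 5)]
```

Oversampling should be applied to the training split only; duplicating examples before splitting would leak copies into the evaluation set and inflate the scores.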

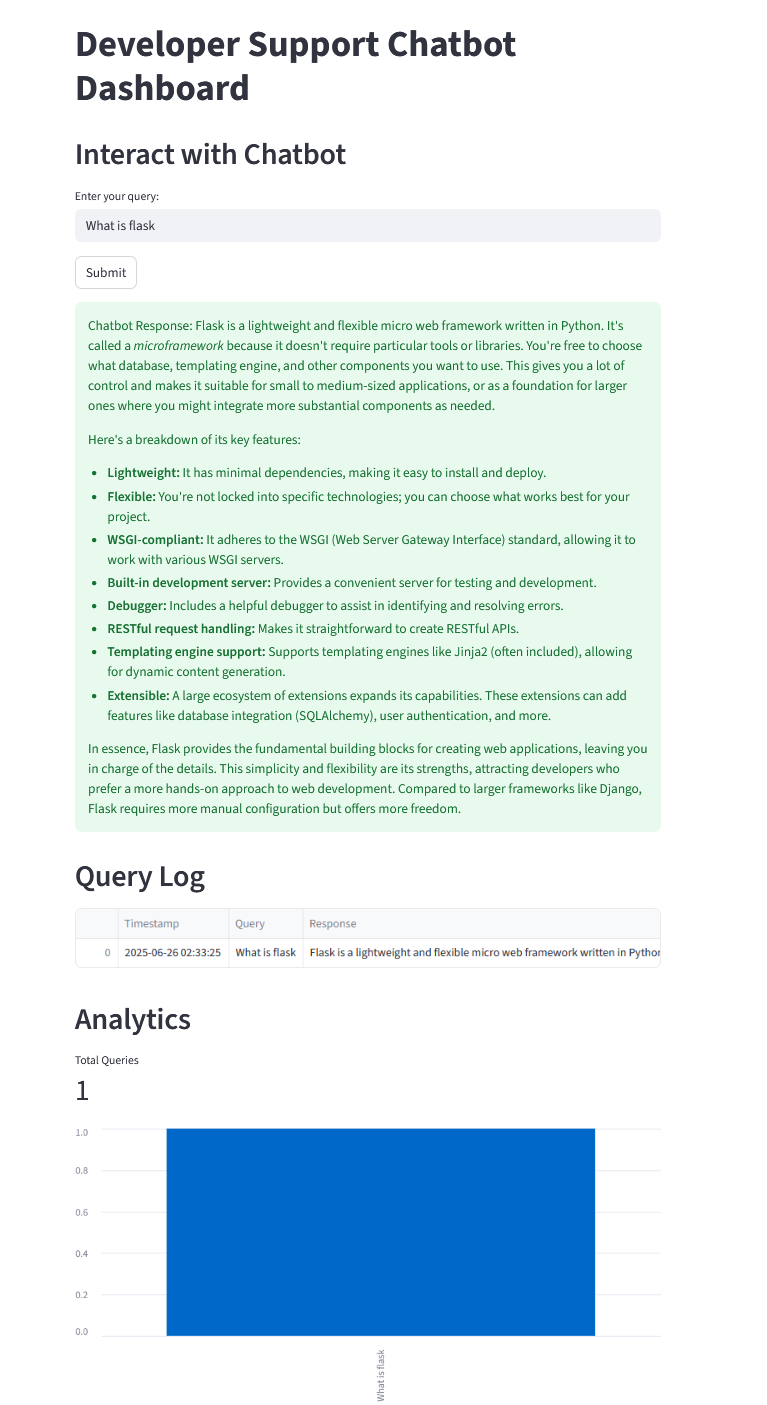

Developer-Support Chatbot

A Gemini-powered AI troubleshooting assistant that answers developer questions, featuring a pluggable Flask API backend, a VS Code extension for in-IDE use, and a Streamlit dashboard for real-time analytics. The Gemini model was fine-tuned on a dataset of 5,000 Q&A pairs.
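A "pluggable" backend usually means the serving layer talks to an interface rather than to a specific model, so backends can be swapped without touching the API. The sketch below assumes that design; `AnswerBackend`, `register_backend`, `EchoBackend`, and `handle_question` are hypothetical names, and the echo backend stands in for a call to the fine-tuned Gemini model. The handler is kept framework-agnostic so a Flask view could wrap it.

```python
from typing import Callable, Dict, Protocol


class AnswerBackend(Protocol):
    """Anything that can turn a developer question into an answer string."""

    def answer(self, question: str) -> str: ...


# Registry of backend factories, keyed by a name the API can select at runtime.
BACKENDS: Dict[str, Callable[[], AnswerBackend]] = {}


def register_backend(name: str):
    """Class decorator that adds a backend to the registry under `name`."""
    def decorator(cls):
        BACKENDS[name] = cls
        return cls
    return decorator


@register_backend("echo")
class EchoBackend:
    """Placeholder backend for local testing; a real one would call the fine-tuned model."""

    def answer(self, question: str) -> str:
        return f"[echo] {question}"


def handle_question(payload: dict, backend_name: str = "echo") -> dict:
    """Validate a request payload and route it to the selected backend."""
    question = str(payload.get("question", "")).strip()
    if not question:
        return {"error": "missing 'question' field"}
    if backend_name not in BACKENDS:
        return {"error": f"unknown backend '{backend_name}'"}
    backend = BACKENDS[backend_name]()
    return {"backend": backend_name, "answer": backend.answer(question)}


if __name__ == "__main__":
    print(handle_question({"question": "Why is my build failing?"}))
    # {'backend': 'echo', 'answer': '[echo] Why is my build failing?'}
```

Adding a new model then only requires another decorated class; the route, the VS Code extension, and the dashboard keep calling the same handler.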